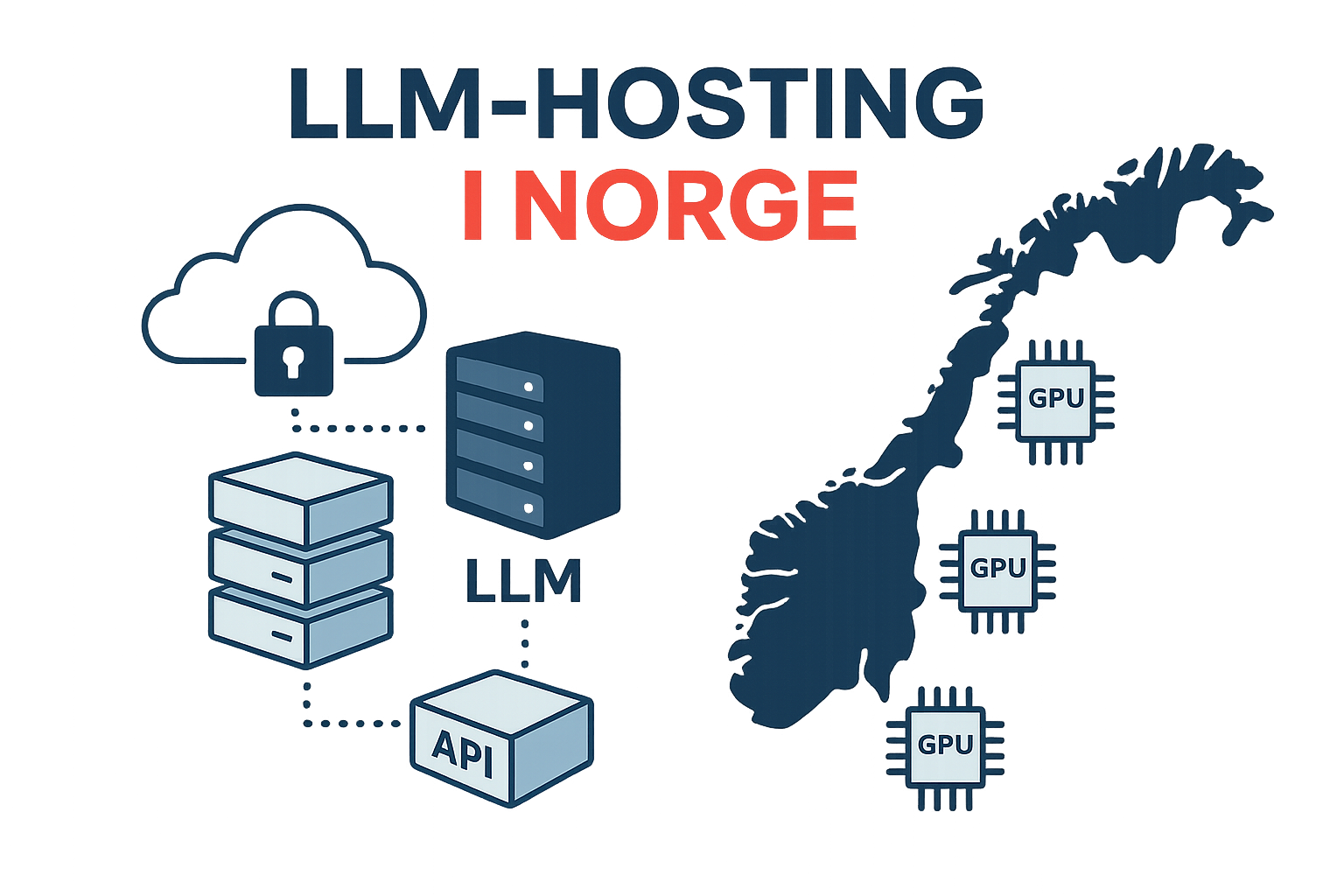

Norwegian LLM Hosting

Norwegian “ChatGPT” for your sensitive data.

Run open-source Large Language Models in Norwegian data centers with full control over performance, data residency, and cost. Our LLM hosting platform is built on top of our high-performance cloud servers and GPU infrastructure, giving you predictable latency, strong data protection, and full API-based automation.

Use a llama.cpp-compatible API endpoint to serve a wide range of open models, while keeping your data within Norway and under your governance and compliance framework.

| Feature | Description |
| --- | --- |
| Norwegian data residency | LLMs run in Norwegian data centers, supporting NIS2-aligned security and local compliance requirements. |
| llama.cpp-compatible API | Use a familiar HTTP API to run open models, integrate with existing applications, and deploy new services quickly. |
| Support for open models | Host popular open-source LLMs (e.g. Llama, OpenAI's gpt-oss, Mistral, and other models from the open-source AI ecosystem). |
| GPU-optimized infrastructure | Leverage dedicated GPU resources for high-throughput inference and low-latency responses. |
| Full automation | Provision, scale, and monitor models through APIs and managed services, integrated with your existing DevOps workflows. |
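
To give a feel for what integrating with a llama.cpp-compatible endpoint can look like, here is a minimal Python sketch. It assumes the service exposes the OpenAI-style `/v1/chat/completions` route that llama.cpp's own HTTP server provides. The base URL, model name, and bearer-token authentication shown here are hypothetical placeholders, not documented values for this platform, so substitute the details issued for your deployment.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the URL issued for your deployment.
BASE_URL = "https://llm.example.no"


def build_chat_request(prompt, model="example-model", api_key=None, base_url=BASE_URL):
    """Build a POST request for the OpenAI-style chat route served by llama.cpp's server."""
    payload = {
        "model": model,  # placeholder model name (assumption)
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Bearer-token authentication is an assumption about the hosting setup.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `chat("Summarize this contract in Norwegian.", api_key="...")` would send the prompt to the hosted model and return its reply. Because the route follows the OpenAI chat format, existing client libraries that accept a custom base URL should in principle work as well.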